[Preprint] On the Perceptual Uncertainty of Neural Networks

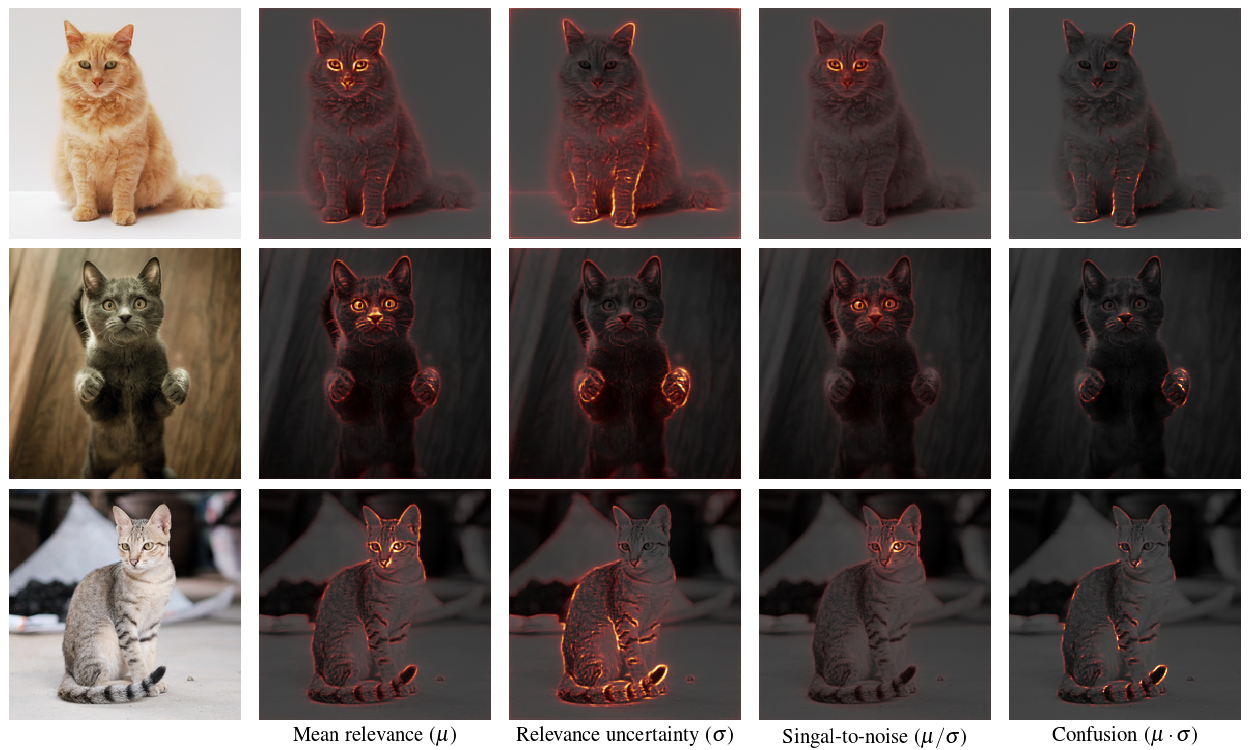

TL;DR: Neural networks can be unsure about what they perceive. We introduce a new method, Monte Carlo Relevance Propagation, that highlights a network’s perceptual uncertainty.

Abstract

Understanding the perceptual uncertainty of neural networks is key to deploying intelligent systems in real-world applications. For this reason, our new paper introduces Monte Carlo Relevance Propagation (MCRP), a method for estimating feature relevance uncertainty. MCRP is a simple but powerful method that uses Monte Carlo estimation of the feature relevance distribution to compute feature relevance uncertainty scores, which allow a deeper understanding of a neural network’s perception and reasoning.
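To make the idea concrete, here is a minimal sketch of Monte Carlo estimation of a feature relevance distribution. It is not the implementation from the paper. It assumes a tiny one-hidden-layer ReLU network, uses dropout kept active at inference time as the source of randomness, and uses gradient × input as a simple stand-in for the relevance method. The function names `relevance_once` and `mc_relevance` and all parameters are hypothetical.

```python
import numpy as np


def relevance_once(x, W1, b1, W2, target, drop_rate, rng):
    """One stochastic pass; returns gradient * input relevance per feature.

    Assumption: gradient * input is only a stand-in relevance measure.
    """
    pre = W1 @ x + b1
    # Inverted dropout mask on the hidden layer, kept active at inference.
    keep = rng.random(pre.shape) >= drop_rate
    mask = keep / (1.0 - drop_rate)
    # Target logit is W2[target] @ (mask * relu(pre)), so its gradient
    # with respect to x is W1^T (W2[target] * mask * relu'(pre)).
    grad_hidden = W2[target] * mask * (pre > 0)
    grad_x = W1.T @ grad_hidden
    return grad_x * x


def mc_relevance(x, params, target, drop_rate=0.5, n_samples=100, seed=0):
    """Sample relevance maps and summarise them per input feature.

    Returns (mean, std): the mean relevance and its standard deviation,
    the latter serving as a per-feature uncertainty score.
    """
    rng = np.random.default_rng(seed)
    W1, b1, W2 = params
    samples = np.stack([
        relevance_once(x, W1, b1, W2, target, drop_rate, rng)
        for _ in range(n_samples)
    ])
    return samples.mean(axis=0), samples.std(axis=0)


# Hypothetical toy network: 4 input features, 8 hidden units, 3 classes.
demo_rng = np.random.default_rng(42)
demo_params = (
    demo_rng.normal(size=(8, 4)),
    demo_rng.normal(size=8),
    demo_rng.normal(size=(3, 8)),
)
demo_x = demo_rng.normal(size=4)
demo_mean, demo_std = mc_relevance(demo_x, demo_params, target=0)
print("mean relevance:", demo_mean)
print("relevance uncertainty:", demo_std)
```

Features with a large standard deviation relative to their mean relevance are the ones whose importance the sampled networks disagree on. With `drop_rate=0.0` every sample is identical, so the uncertainty collapses to zero.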

You can find the preprint on arXiv (arXiv:2008.01468).

@article{fabi2020feature,
  title={On Feature Relevance Uncertainty: A Monte Carlo Dropout Sampling Approach},
  author={Fabi, Kai and Schneider, Jonas},
  journal={arXiv preprint arXiv:2008.01468},
  year={2020}
}